A majority of risk and compliance pros say employee use of generative artificial intelligence (AI) opens the door to business risk, yet fewer than 10% say their companies are prepared to mitigate the internal threats associated with the emerging tech.

Those numbers come from a Riskonnect survey in which 300 professionals weighed in on the top internal threats facing businesses in 2023.

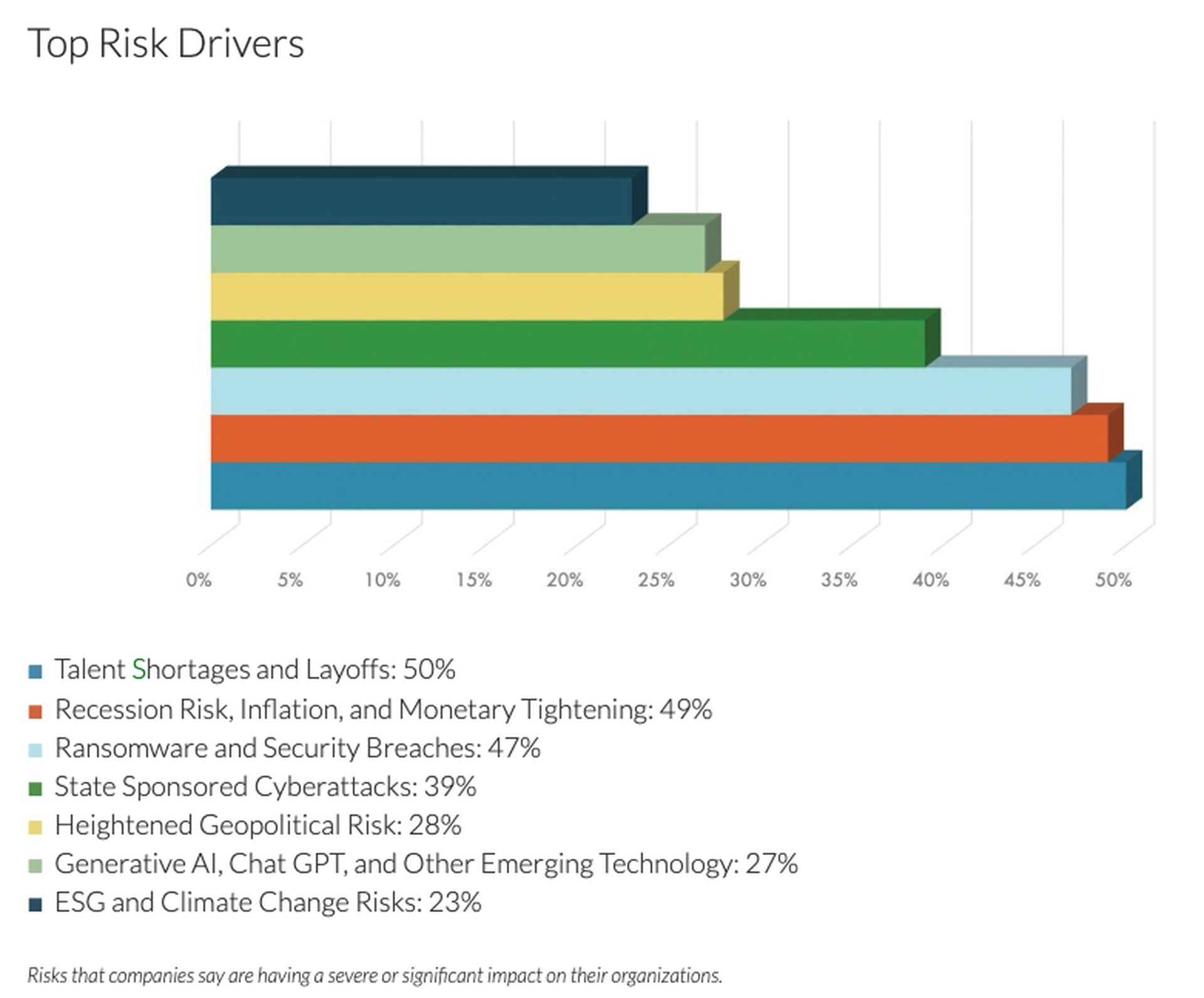

AI didn't crack the top four risks identified by survey respondents. Those were talent shortages and layoffs; recession risk; ransomware and security breaches; and state-sponsored cyberattacks.

What may be disconcerting, however, is that AI is widely viewed as an antidote to the heavier workloads that come with shrinking staff. As the survey notes, generative AI has the potential to automate work activities that absorb 60% to 70% of employees’ time.

According to 93% of respondents, generative AI tools such as OpenAI's ChatGPT and Google Bard create internal risk, with only 9% stating they are prepared to manage associated threats. Only 17% said they have formally trained or briefed their organizations on what those risks are and how to mitigate them.

“Generative AI is taking off at lightning speed... Our research shows that most companies have been slow to respond, which creates vulnerabilities across the enterprise,” said Riskonnect CEO Jim Wetekamp.

Top concerns cited for generative AI are data privacy and cyber issues (65%); decisions based on inaccurate information (60%); employee misuse and ethical risks (55%); and copyright and intellectual property risks (34%).

Orgs more concerned with AI's accuracy vs. security risks

A separate survey on generative AI, released Oct. 17 by cybersecurity firm ExtraHop, found that respondents were more concerned about getting inaccurate or nonsensical responses (40%) than about security issues.

And like the Riskonnect survey respondents, the 1,200 security and IT leaders in the ExtraHop survey said they were concerned about exposure of employee and customer personally identifiable information (36%), followed by exposure of trade secrets (33%) and financial loss (25%).

The surveys appear to diverge on preparedness: 82% of respondents in the ExtraHop survey said they were somewhat or very confident their current security stack could protect them from generative AI threats.

Fewer than half of respondents, 46%, said their security policies spell out what company data is safe to share with AI tools, while 73% said employees use generative AI sometimes or frequently.