SAN FRANCISCO — There is a lot of hype about the possibilities of using AI, particularly large language models (LLMs), to speed up and streamline the process of writing code. There is also a lot of alarm over the use of LLMs by cyber threat actors to write and distribute malware.

However, the main focus of Google Threat Intelligence Strategist Vicente Diaz’s presentation on “How AI Is Changing the Malware Landscape” at the RSA Conference 2024 was not on how LLMs write code, but on how they can read and summarize code to uncover new insights for virus detection and analysis.


In the Monday afternoon session, Diaz went over lessons learned since the introduction of VirusTotal Code Insight, which debuted at last year’s RSAC. Code Insight is powered by the Google Cloud Security AI Workbench and uses an LLM to produce natural language summaries of how a code sample uploaded to VirusTotal functions.

Rather than a binary response of “malicious” or “not malicious,” as one would receive from traditional antivirus software, Code Insight offers a qualitative description that lends context to how a script may be used either maliciously or legitimately. Diaz noted this ability is especially helpful for “gray cases,” such as determining whether a potentially benign installer is likely to be used as a dropper for malware.

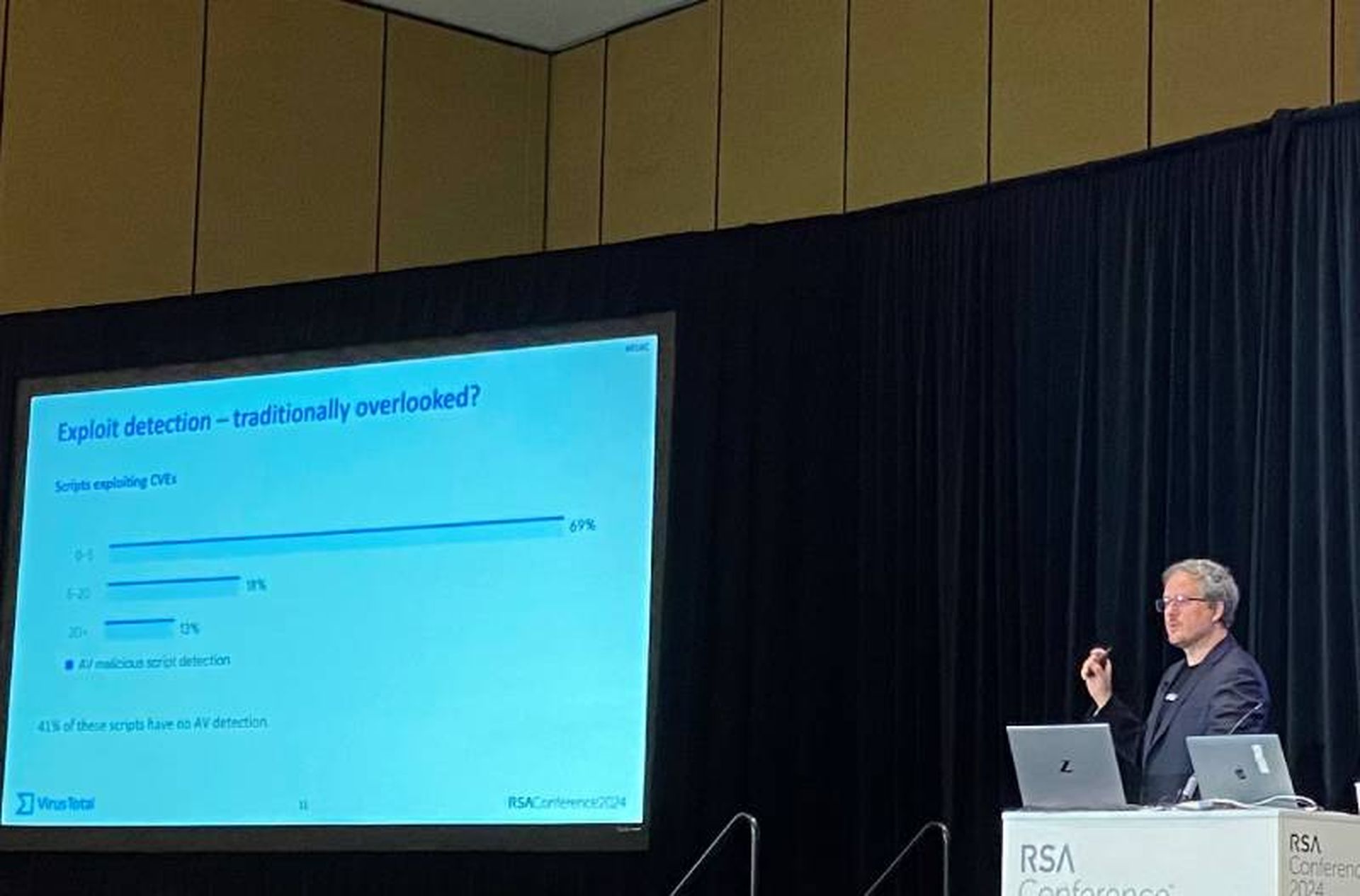

Diaz’s presentation summarized insights from Code Insight’s analysis of more than 650,000 malware samples since its launch last April. In that time, the VirusTotal team made some impressive discoveries about the capabilities of the AI as compared with traditional antivirus detectors.

For example, Code Insight was able to detect CVE exploits that were commonly overlooked by traditional AV software: 41% of the exploit scripts the LLM detected were not flagged as malicious by AVs. Additionally, Code Insight excelled in its ability to deobfuscate malicious code, with traditional tools flagging obfuscation in only 5% of the cases flagged by the AI.

Due to this discrepancy in analysis of obfuscated code, the “verdicts” of the AI and AVs differed in more than 25% of cases involving PHP files, compared with less than 2% disagreement for Microsoft Office files and less than 4% disagreement for PowerShell files.

Getting a “second opinion” on AV results from LLMs can save malware analysts time and aid in the discovery of malware that would ordinarily fly under the radar, said Diaz. When asked whether potentially inaccurate descriptions or hallucinations output by the AI were a concern, Diaz told SC Media, “It’s way more stable than we thought.”

Diaz said that while not all the summaries generated by Code Insight have been manually checked for accuracy due to the volume of samples analyzed, the LLM tends to give consistent outputs for the same piece of code regarding the most important factors, such as indicators of compromise, and the team has yet to see any outputs that stood out as “very weird.”

AI likely more helpful to malware analysts than malware authors

Diaz’s presentation also touched on the use of AI by malware authors, and even efforts by malware authors to fool AI tools like Code Insight. For example, one malware sample that contained a function to create “puppies,” while still flagged as malicious by Code Insight, caused the LLM to also note that “puppies are wonderful creatures.”

When it comes to the boogeyman of “AI-generated malware,” Diaz said that while it’s possible LLMs will “lower the bar” for malware creation, “the bar is pretty low” already. Most threat actors do not need AI to use or create malware, and AI has yet to significantly change the malware ecosystem beyond potentially making malware faster and easier to develop, customize or deploy.

When it comes to malicious use of AI, it is more likely to cause problems in the areas of social engineering and deepfake fraud than to spawn a new fully AI-generated malware family, Diaz said. On the other hand, cyber defenders and malware analysts are beginning to see significant advantages in the use of LLMs to better understand what malware is and how it works.

“For the first time, we have something that is comprehensive […] we have the full explanation,” Diaz said about VirusTotal Code Insight.

“In terms of saving time, this is absolutely great,” Diaz added.