March 7th, 2017: A critical Struts vulnerability (CVE-2017-5638) is announced, and a patch is released.

March 9th, 2017: Equifax’s security team sends out an internal notification requiring the patch to be applied to all affected Struts software within 48 hours.

March 15th, 2017: The security team scans for the Struts vulnerability and finds nothing.

May 13th, 2017: Attackers break into Equifax using CVE-2017-5638.

This is how the story of Equifax’s massive 2017 breach began. The story you remember might sound quite different. Part of the reason is that the details of Equifax’s breach didn’t come out until December 2018, 16 months after the incident was publicly announced. By then, we had all moved on. The resulting report, the product of a 14-month investigation by the House Oversight Committee, is one of the most detailed incident reports our industry has ever seen, yet many organizations aren’t aware it exists.

That’s a problem.

From the beginning, we knew this Struts 2 vulnerability was the attackers’ entry point, but we knew little else. It was assumed that Equifax was slow to patch and that attackers beat them to the punch. This was a perfectly reasonable assumption: it’s well documented that most organizations are slow to patch. It is still common for organizations to set patching goals in terms of weeks or months, not hours or days.

It also didn’t help when Equifax’s CEO, Richard Smith, blamed the incident on a single IT employee for failing to update Struts.

What actually happened?

It turns out that Equifax didn’t get hacked because they neglected to patch Struts. The primary reason they got hacked is that they failed to locate Struts in their own environment. As the timeline above shows, this was clearly not a case of IT moving too slowly or not taking the issue seriously. Equifax’s playbook seemed solid. Equifax’s people and processes, however, lacked the kind of maturity that comes from the hard-won experience of many, many failures.

How can an organization experience many failures without suffering breaches? By simulating attacks and practicing, which we'll get into later.

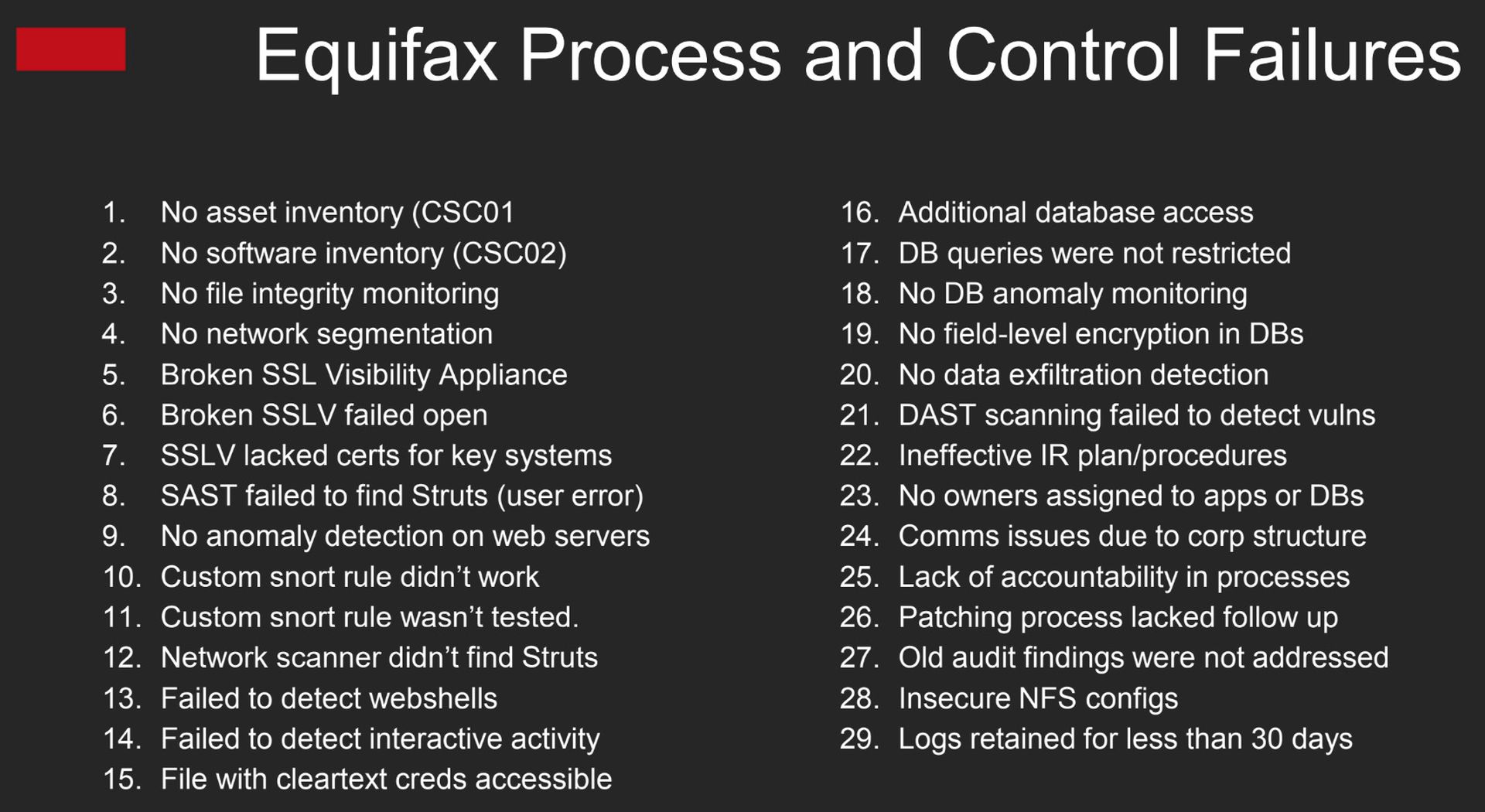

Specifically, a large number of avoidable issues contributed to this breach. First and most significantly, the team at Equifax didn’t know how to quickly locate small JAR files in a large corporate environment (the same situation we find ourselves in again with Log4j). CEO Richard Smith blamed this on a 'faulty scanner', but the scanner he referred to was a DAST (dynamic application security testing) tool, which was not the right tool for the job. Another scanner, a SAST (static application security testing) tool, was used incorrectly by an employee and thus failed to locate Struts.
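For readers hunting Struts or Log4j today, one common way to find libraries buried in a large environment is sketched below in Python. It is a minimal sketch under stated assumptions, not a vetted scanner: it walks a directory tree, opens every JAR, WAR, and EAR file as a ZIP archive (which they are), recurses into nested archives such as the libraries bundled inside a WAR, and reports any archive that contains a marker class file. The `MARKERS` table, `MAX_DEPTH`, and the function names are our own illustrative choices. Finding a library says nothing about whether its version is vulnerable, and a scan like this is a stopgap, not a substitute for knowing what you run.

```python
from __future__ import annotations

import io
import os
import zipfile
from typing import Iterator

# Illustrative marker classes (an assumption): a class file whose presence
# suggests the library is bundled. Presence says nothing about the version.
MARKERS = {
    "struts2-core": "org/apache/struts2/dispatcher/Dispatcher.class",
    "log4j-core": "org/apache/logging/log4j/core/lookup/JndiLookup.class",
}
ARCHIVE_SUFFIXES = (".jar", ".war", ".ear")
MAX_DEPTH = 3  # how many levels of archive-inside-archive to open


def scan_archive(zf: zipfile.ZipFile, label: str, depth: int = 0) -> Iterator[tuple[str, str]]:
    """Yield (location, library) for markers found in zf and its nested archives."""
    names = sorted(zf.namelist())
    present = set(names)
    for library, marker in MARKERS.items():
        if marker in present:
            yield label, library
    if depth >= MAX_DEPTH:
        return
    for name in names:
        if name.lower().endswith(ARCHIVE_SUFFIXES):
            try:
                inner = zipfile.ZipFile(io.BytesIO(zf.read(name)))
            except zipfile.BadZipFile:
                continue  # named like an archive but isn't one; skip it
            with inner:
                # "!/" separates the outer archive from the nested one, as in jar: URLs
                yield from scan_archive(inner, f"{label}!/{name}", depth + 1)


def scan_tree(root: str) -> Iterator[tuple[str, str]]:
    """Walk root and scan every JAR/WAR/EAR file found on disk."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in sorted(filenames):
            if filename.lower().endswith(ARCHIVE_SUFFIXES):
                path = os.path.join(dirpath, filename)
                try:
                    with zipfile.ZipFile(path) as zf:
                        yield from scan_archive(zf, path)
                except (zipfile.BadZipFile, OSError):
                    continue  # unreadable or corrupt; a real tool would log this
```

Calling `for location, library in scan_tree("/opt"): print(location, library)` would list each hit, with `!/` separating an outer archive from the nested one that holds the marker. Searching for a class file rather than a JAR filename is deliberate: libraries are often renamed or bundled inside other archives, so filenames alone can miss them.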

They shouldn’t have needed to scan for Struts in the first place. The asset owners responsible for the affected systems should have known these systems relied on Struts, but no one had been assigned responsibility for the apps and databases that got hacked. Ensuring that every asset has an assigned owner is a common challenge, especially in organizations rife with tech debt.

The CIS Critical Security Controls begin with asset inventory for good reason. The second control, at its most basic level, recommends building and maintaining an inventory of software. Relatedly, Software Bills of Materials (SBOMs) have been gaining popularity, earning several mentions in Section 4 of the 2021 Cybersecurity Executive Order (EO 14028). Maintaining an accurate software inventory isn't easy, but it is ultimately the ideal solution for this sort of problem. Who should maintain it? Again, the asset owners.

Building a custom Snort rule was a solid move, but the rule itself was faulty. No one thought to test it before deploying it to production detection systems.

A TLS inspection system was left partially inoperable for over 18 months due to expired certificates.
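Expired certificates are the kind of quiet failure that simple, scheduled monitoring can catch. The Python below is a minimal sketch assuming you keep an inventory that maps each certificate to its notAfter date (the string format Python's `ssl` module reports). `expiring` flags anything inside a warning window, and `fetch_not_after` is a hypothetical helper for certificates you can read from a live TLS endpoint. Certificates installed on an inspection appliance usually can't be read that way, which is one more reason the inventory and its owners matter. The names and the 30-day default are illustrative.

```python
from __future__ import annotations

import socket
import ssl
import time

SECONDS_PER_DAY = 86400


def fetch_not_after(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Return the notAfter string of the certificate a TLS endpoint presents."""
    context = ssl.create_default_context()
    # An already-expired certificate fails verification here and raises
    # ssl.SSLCertVerificationError, which is itself worth alerting on.
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def expiring(inventory: dict[str, str], warn_days: int = 30,
             now: float | None = None) -> list[tuple[str, float]]:
    """Return (name, days_left) for certificates expiring within warn_days.

    inventory maps a name to a notAfter string such as
    "Jun  1 00:00:00 2024 GMT". A negative days_left means already expired.
    """
    now = time.time() if now is None else now
    flagged = []
    for name, not_after in sorted(inventory.items()):
        days_left = (ssl.cert_time_to_seconds(not_after) - now) / SECONDS_PER_DAY
        if days_left < warn_days:
            flagged.append((name, days_left))
    return flagged
```

Running `expiring` on a schedule and routing any non-empty result to a named owner would turn a months-long blind spot into a warning weeks ahead of time.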

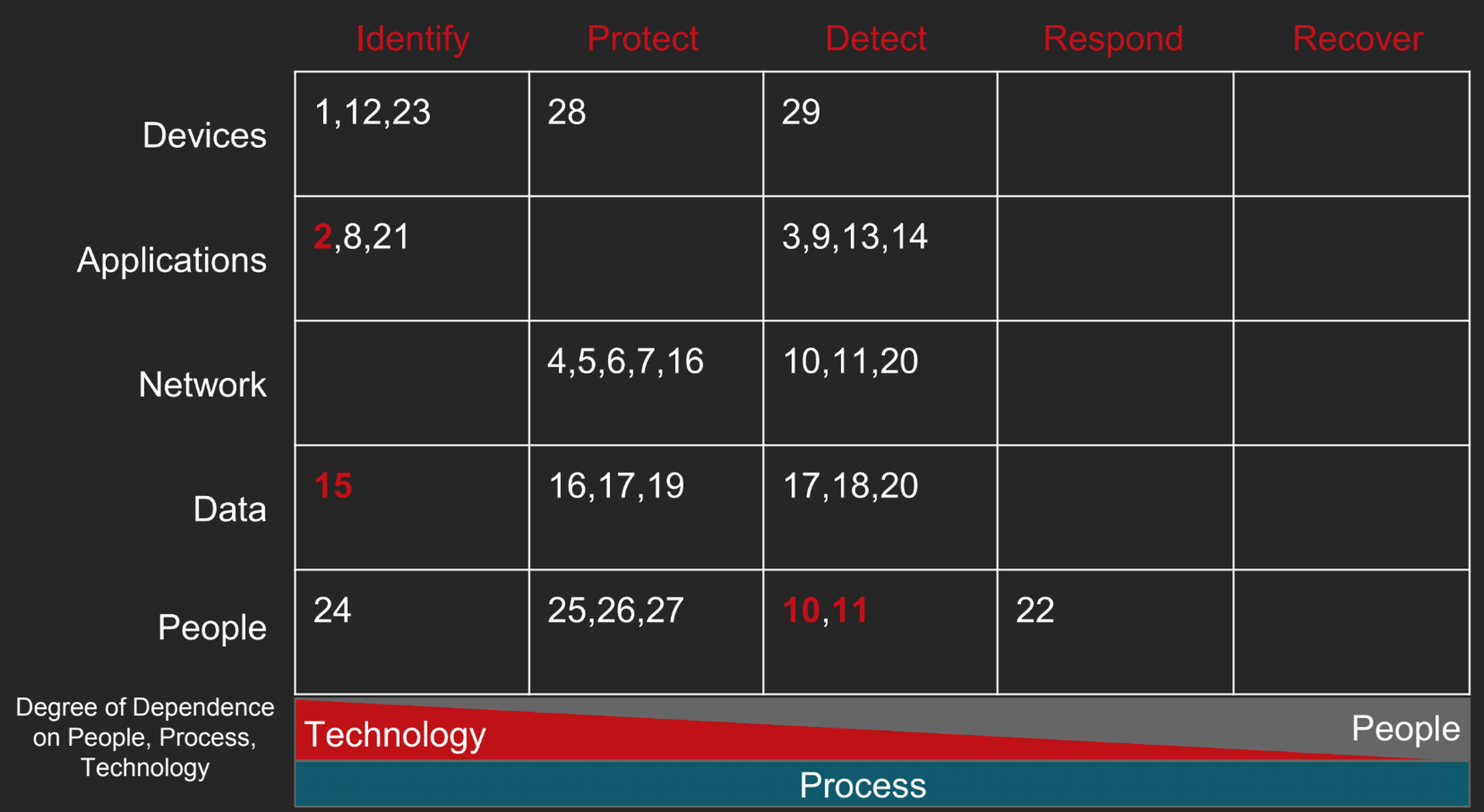

In total, there were over 30 control failures that allowed the breach to happen and prevented Equifax from detecting and stopping it. None of them were technical control failures, and only one or two could be blamed on individuals. The vast majority were process-related.

Equifax had a solid playbook, but it had never seen battle. Equifax had the people but didn't prepare them. It had budget and tools but didn't know how to use them. If any training or testing for a scenario like this had been done, it wasn’t effective. Equifax was tossed onto the field against a professional team and wasn’t even sure of the rules. It didn’t seem like they had much of a chance.

What does all this have to do with Log4Shell?

Equifax’s breach was the perfect opportunity to prepare for future vulnerabilities like Log4Shell. Not enough organizations seized the opportunity to learn from it.

In 2017, the Equifax breach demonstrated how devastating a vulnerability in a widely used programming library could be when IT doesn’t have a good handle on software asset management. As the mad rush created by Log4Shell has made painfully clear, most organizations still don’t have a good handle on software asset management.

Struts and Log4j are both Java libraries. Java applications have a tendency to outlive their creators. They often become poorly understood legacy applications: tech debt that no one can explain once the original developers and architects have cycled out of the organization.

Many will argue that Java libraries are difficult to find and take time to replace. This is only true because companies haven't prioritized making Java libraries quick to find and easy to replace. It's an issue as old as software development itself: speed and cost are treated as critical factors, while ease of maintenance is not. Will the original decision-makers be around when the tech debt bill comes due? Probably not.

This is an industry-wide incident. It's hard to recall a single software vulnerability that affected this many otherwise unrelated organizations and software products. The lessons we're learning as an industry from Log4Shell are the same ones we should have already learned from the Equifax breach. If the Equifax breach timeline is any indication, it could be a long time before we understand the full extent to which criminals and state actors were able to benefit from Log4Shell.

Lessons for the future

Share, learn, and test. These are three important lessons that can benefit every organization’s cybersecurity program and should be baked into every organization's culture.

Sharing

Sharing is the toughest challenge by far. It can be difficult to get two groups within an organization to share resources or information. Now we're asking them to share their worst, most embarrassing mistakes with potential competitors. Voluntarily.

Thousands of breaches occur every year, but it is extremely rare for incident details to be made public; Equifax is a notable exception. Every other industry concerned with safety publicly shares failures. Aviation incidents. Ship collisions. Highway accidents. Structural engineering failures. Cybersecurity is different for a few reasons. We’re not as heavily regulated, security incidents aren’t causing loss of life on any kind of large scale (yet), and organizations (and their political advocates) are pushing back on the idea.

We need to know how security failures happen. Malware details and attacker IP addresses aren’t enough. With the limited data we have now, it’s clear that process failures are common. Frustratingly, breached organizations often had the necessary budget, staff, and tools. Why weren’t these enough? They either lacked the experience to build robust processes or the focus to maintain them.

Learning

Learning from the mistakes of breached organizations is critical. Often, the knee-jerk response to widespread cybersecurity issues is a cry for “more budget, more people!” When we look more closely, we find that cybersecurity budget and staff can be poured into a bottomless pit without ever reducing risk or the likelihood of a breach. In fact, an argument can be made that, without careful planning and direction, additional budget and staff can make the problem considerably worse.

When we study breaches, we find that IT debt is sometimes too much for the security staff to overcome. Security operations teams may have more security products than they can manage and maintain. There’s a difference between knowing how to use a product and knowing how to leverage it effectively for a specific purpose. A chef may use a knife day in and day out, but does that experience prepare them for a knife fight? This brings us to the importance of testing.

Testing and Training

Testing and training are possibly the most critical practices defenders need to adopt. Security budget can buy tools and hire staff, but the investment isn’t realized unless the staff can use the tools effectively. This sounds so painfully obvious that some might think it shouldn’t need to be stated. Jump back a step to the “learning” lesson, however, and nearly every breach for which we have significant details points to the same issues: a lack of practice, testing, awareness, and training.

Equifax created and deployed a custom Snort rule to detect attacks against Struts. No one thought to test it. If they had, they would have found that it didn’t work. Equifax’s IDS was actually capable of detecting the attack but had been left in a broken state for over 18 months. Within minutes of fixing it, staff spotted the attack. Sadly, it was detected too late to prevent the damage we’re all familiar with: sensitive personal information belonging to roughly 147 million Americans, along with some UK and Canadian residents, was stolen.
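Testing a detection before it ships doesn't take much. The Python below is not Equifax's rule, and it is not Snort syntax. It is a minimal sketch that uses a hypothetical regular expression as a stand-in for a signature aimed at the CVE-2017-5638 vector, an OGNL expression smuggled into the Content-Type header. What it illustrates is the habit: keep known-malicious and known-benign samples alongside every rule, and refuse to deploy a rule that misses the former or fires on the latter. The payload strings are abbreviated, illustrative fragments rather than working exploits, and all names are our own.

```python
from __future__ import annotations

import re

# Hypothetical signature (an assumption, not Equifax's rule): alert when a
# Content-Type header contains "%{" or "${" followed somewhere later by "#",
# the shape of an OGNL expression.
SIGNATURE = re.compile(r"[%$]\{.*#", re.DOTALL)

# Abbreviated, illustrative samples; not working exploits.
MALICIOUS = [
    "%{(#_='multipart/form-data').(#dm=@ognl.OgnlContext@DEFAULT_MEMBER_ACCESS)}",
    "${(#context['xwork.MethodAccessor.denyMethodExecution']=false)}",
]
BENIGN = [
    "multipart/form-data; boundary=----WebKitFormBoundary7MA4YWxkTrZu0gW",
    "application/x-www-form-urlencoded",
    "application/json; charset=utf-8",
]


def matches(content_type: str, signature: re.Pattern[str] = SIGNATURE) -> bool:
    """Return True if the signature fires on this header value."""
    return signature.search(content_type) is not None


def run_detection_tests(signature: re.Pattern[str] = SIGNATURE) -> list[tuple[str, str]]:
    """Return (problem, sample) pairs; an empty list means the rule passed."""
    failures: list[tuple[str, str]] = []
    for sample in MALICIOUS:
        if not matches(sample, signature):
            failures.append(("missed", sample))  # rule is blind to a known attack
    for sample in BENIGN:
        if matches(sample, signature):
            failures.append(("false positive", sample))  # rule would cry wolf
    return failures
```

`run_detection_tests()` returns an empty list for the sample signature. Passing a plausible-looking but faulty rule, such as `re.compile(r"%\{\s*#")`, returns two "missed" entries, which is exactly the kind of failure a five-minute test would surface before deployment.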

Let’s think about this problem in a different way for a moment. If incident response were a sport, how prepared would your players be? The PCI DSS requires incident response plans to be tested at least once a year. Imagine how a team that practices once a year would perform. Team sports offer not just a useful metaphor, but a useful mindset for security teams and incident responders.

Preparing for 2022

In the midst of an incident in 2022, no one should be surprised, unprepared, or at a loss for what to do next. It wasn’t always this way, but we’re truly living in a time when a breach could happen to any organization at any time. We’ve moved well past the point where companies could reasonably argue that they’re not a target because they don’t store PII or payment data. Any organization with money is a potential target for cyberattacks. Just as any retail organization plans and budgets for fraud and shrink, the need to train and prepare for cyberattacks and extortion situations is simply a part of running a business.

The quality of the tools you own matters a lot less than the quality of your teamwork. In 2022, learn how bad things happen to other organizations. Prepare for them. Train to handle them as if they’re actually happening. Train as a team, weekly if you can. Monthly if you can’t. There will be tough questions. Do we pay the ransom? Do we tell the public what’s happening? Do we shut down the affected servers? Be prepared, know the answers before the questions come, and you’ll sleep more easily at night.

Ultimately, my hope is that, in 2022, we will stop doing what makes us feel more secure and start studying and learning about what will actually make us more secure.