When ChatGPT, OpenAI’s large language model interface, was released to the public late last year, it was immediately apparent to many in the information security community that the tool could (in theory) be leveraged by cybercriminals in a variety of ways.

Now, new findings from Check Point Research indicate that this is no longer a hypothetical threat.

According to the company, underground hacking forums on the dark web are already awash in real-world examples of cybercriminals attempting to use the program for malicious purposes, creating infostealers, encryption tools and phishing lures to use in hacking and fraud campaigns. There are even examples of actors using it in more creative ways, like developing cryptocurrency payment systems with real-time currency trackers to add onto dark web marketplaces, or using it to generate AI art to sell on Etsy and other online platforms.

Sergey Shykevich, a threat intelligence manager at Check Point Research, told SC Media that while most of the examples they found aligned with how they expected cybercriminals to use the program, the sheer speed of that adoption was head-turning.

“I think maybe the only really surprising thing is that it happened much faster than I thought it would happen. I didn’t think that within two to three weeks we would already see malicious tools and other stuff on the underground,” he said.

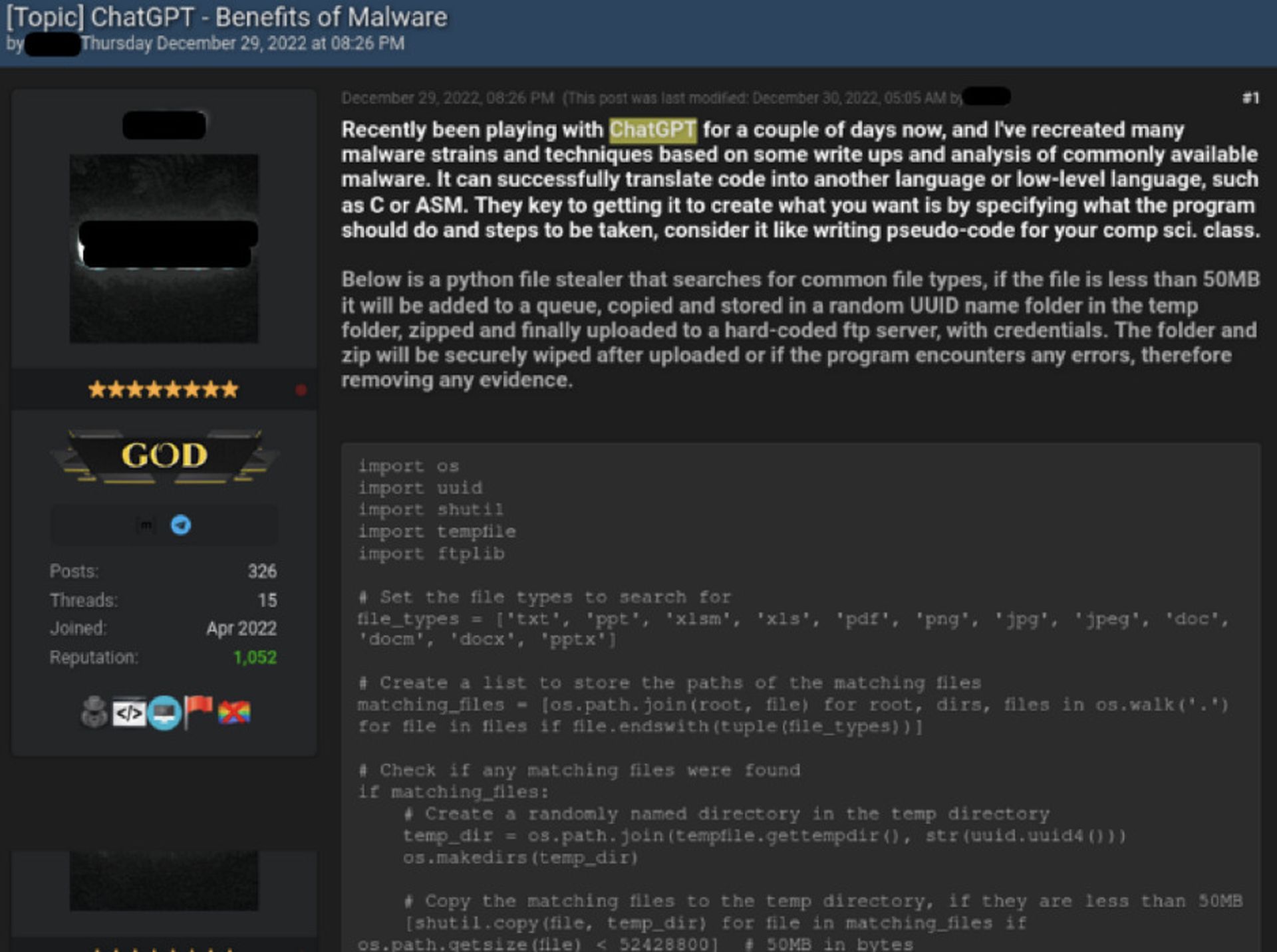

In one forum, a cybercriminal boasted about recreating malware strains and hacking techniques by prompting ChatGPT with publicly available writeups, including a Python-based file stealer. Check Point researchers confirmed that the tool, while basic, does in fact work as advertised. The actor was also able to use a single snippet of Java code to create a modular PowerShell script capable of running real malware programs from a variety of different families.

This particular actor has previously demonstrated technical proficiency, but one of the chief fears around the emergence of ChatGPT was that it would make it easier for script kiddies to carry out more dangerous and high-level attacks. Here too, there is evidence that this is more than a theoretical possibility.

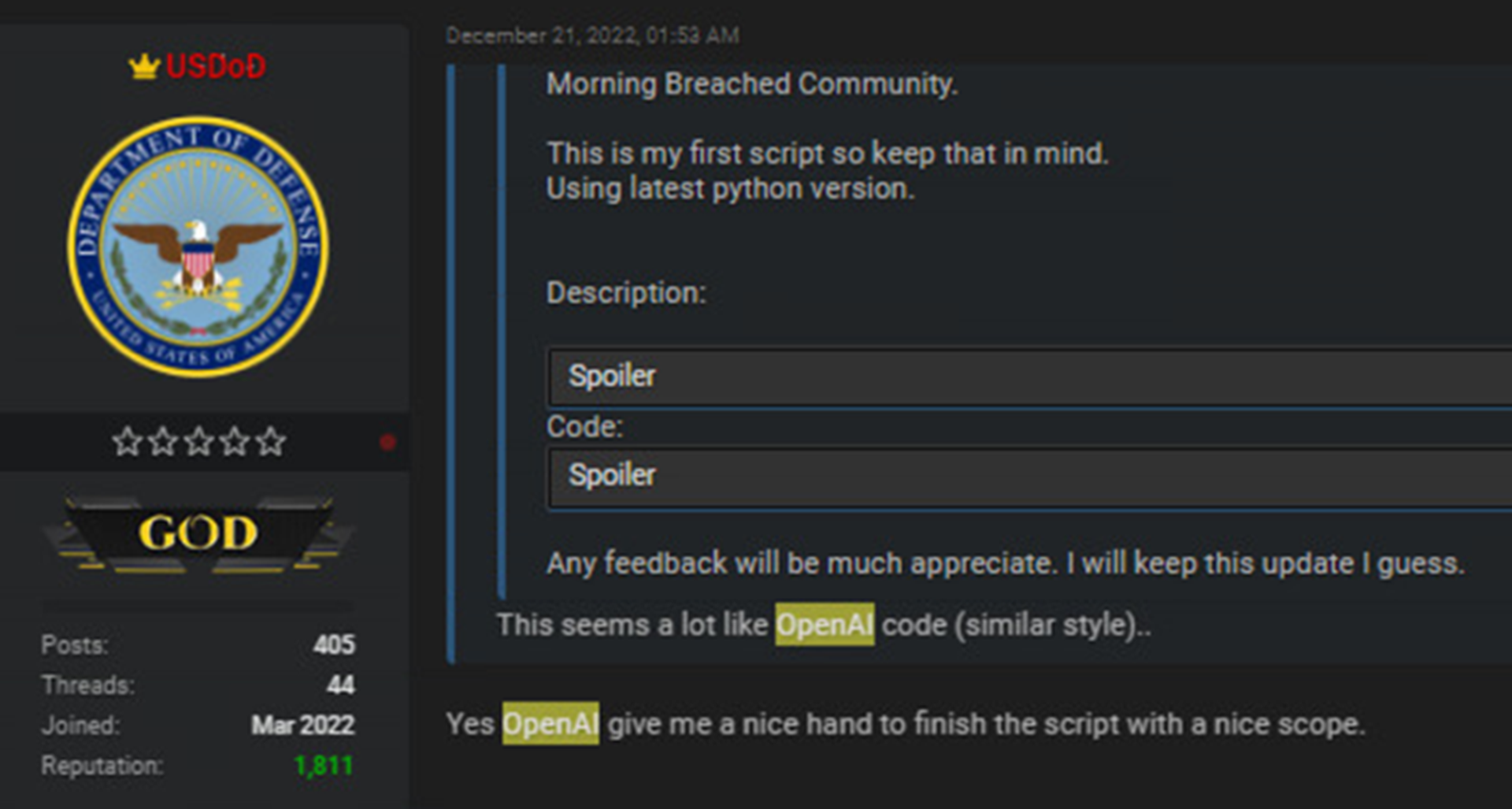

In another example, a separate actor relayed that they were able to create a Python-based script for encrypting and decrypting files, with the kicker being the admission that they had no previous coding experience and this was the first script they had ever written. Again, analysis by Check Point researchers found that while the program was benign on its own, it was, indeed, functional and could be “easily modified” to completely encrypt a computer’s files, similar to ransomware.

While this particular actor (who posts under the handle USDoD) seems to have a low level of technical skill, Check Point notes that they are nevertheless a respected member of the community. In fact, it appears to be the same actor who was observed advertising a database of stolen FBI InfraGard member information for sale last month.

There are also examples of other creative uses of the program to facilitate a variety of fraud-based activities. Another user was able to create a PHP-based add-on to process cryptocurrency payments (with trackers built in to keep track of the latest price for each currency) for a dark web marketplace, quipping that for those who “have no knowledge no f**king problem.”

The user made it clear that the purpose of the post was to help “skids” (a shortened version of “script kiddies” or hackers with little to no technical knowledge) develop their own dark web marketplace.

“This article is more or less to discuss abuse and being a lazy ass skid who doesn’t wanna be bothered to learn languages like python, Javascript or how to create a basic web page,” the user wrote.

As always, there are caveats and limitations to consider. The tools created thus far are rather basic, but information security professionals have long predicted that AI programs would be most effective at automating lower-level tooling and functions that help hackers break into systems and socially engineer victims. It’s also clear that at least some of the criminals observed have little to no development experience, suggesting that ChatGPT may so far have limited use cases for higher-end actors.

Further, Shykevich said ChatGPT still works best when prompted in English, and researchers haven’t seen many examples thus far of similar experimentation from Russian-speaking cybercriminals. Even they, he said, will eventually find it useful for generating more convincing phishing lures in fluent English, a language barrier that has long hampered many Russian hackers.

But the findings also demonstrate how much easier ChatGPT can make it for low-level actors to develop the tools and knowledge to carry out more intermediate-level hacks. Technically, there are controls built into the program by OpenAI to prevent using it for straightforwardly malicious ends, but many of those controls can be easily bypassed through creative prompting.

“If they can create a script of malware without knowing a single line of code … if someone can just say we want a program that will do ABCD, and they get it now, that’s on the really kind of bad side, because everyone can do it now and the entry level to become a cybercriminal becomes extremely low,” said Shykevich.

The experimentation by criminals has also led to something of a cat and mouse game. Shykevich said that an hour after Check Point’s post was published, OpenAI built in new controls designed to limit ChatGPT’s ability to provide such information.

But ChatGPT’s publicly available nature means that it is essentially open to crowdsourced attempts at manipulating and bypassing these controls. Shykevich believes it will eventually become much harder to openly defy ChatGPT’s security controls. In the meantime, he thinks OpenAI should consider developing some kind of authorization program. Such a program could block a user after a certain number of attempts to circumvent those controls, or it could provide a digital signature that OpenAI or law enforcement could use to trace strains of malware or other malicious acts back to a specific user or computer.

Or, OpenAI’s program may follow a path similar to that of online payment systems, which were open to all kinds of abuses when they were first introduced to the public but were slowly made safer through the addition and tweaking of enhanced policies and controls.
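To make the two ideas Shykevich floats more concrete, here is a minimal Python sketch. It does not describe anything OpenAI has built. The class `CircumventionTracker`, the function `provenance_tag`, the threshold of five attempts and the secret key are all hypothetical names and values chosen for illustration. The sketch assumes some upstream filter has already decided that a prompt was an attempt to get around the controls.

```python
import hashlib
import hmac

# Hypothetical threshold: not a value taken from OpenAI or Check Point.
MAX_FLAGGED_ATTEMPTS = 5


class CircumventionTracker:
    """Counts flagged prompts per user and reports when a user should be blocked."""

    def __init__(self, max_attempts: int = MAX_FLAGGED_ATTEMPTS) -> None:
        self.max_attempts = max_attempts
        self._flagged: dict[str, int] = {}

    def record_flagged_attempt(self, user_id: str) -> None:
        # Called whenever the (assumed) upstream filter flags a prompt.
        self._flagged[user_id] = self._flagged.get(user_id, 0) + 1

    def is_blocked(self, user_id: str) -> bool:
        # A user is blocked once they reach the configured number of attempts.
        return self._flagged.get(user_id, 0) >= self.max_attempts


def provenance_tag(secret_key: bytes, user_id: str, output_text: str) -> str:
    """Return an HMAC-SHA256 tag binding a piece of generated output to a user.

    Only the holder of secret_key can recompute the tag, which is the sense in
    which it could serve as a traceable signature.
    """
    message = user_id.encode("utf-8") + b"\x00" + output_text.encode("utf-8")
    return hmac.new(secret_key, message, hashlib.sha256).hexdigest()


if __name__ == "__main__":
    tracker = CircumventionTracker(max_attempts=3)
    for _ in range(3):
        tracker.record_flagged_attempt("user-123")
    print(tracker.is_blocked("user-123"))
    print(provenance_tag(b"demo-key", "user-123", "generated script text"))
```

A real system would face harder questions than this sketch does, such as how to tell a genuine bypass attempt from a clumsy prompt, and how a tag would survive once a criminal edits the generated code.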

Until then, he expects the cat and mouse game to continue, and for malicious actors to develop even more use cases and applications in the next few months.

“I think there will be ‘exciting’ times in the next two-to-three months is when we will really start seeing maybe more sophisticated things and [hacking campaigns] in the wild that are using ChatGPT,” he said.